Course Overview

Starting on Monday, January 13th, 2025

This workshop is a gentle but in-depth introduction to the field of Data Science. It gives equal emphasis to the theoretical foundations and to hands-on experience with real-world data analyses on the Google Cloud Platform. The workshop comprises over 50 hours of in-person training across 12 weekday-evening sessions over 3 weeks. It includes in-depth lecture/theory sessions, guided labs for building AI models, quizzes, projects on real-world datasets, guided readings of influential research papers, and discussion groups.

The teaching faculty for this workshop comprises the instructor, a supportive staff of teaching assistants, and a workshop coordinator. Together they facilitate learning through close guidance and one-on-one sessions when needed.

You can attend the workshop in person or remotely. State-of-the-art facilities and instructional equipment ensure that the learning experience is the same either way. You can also mix the two modes: attend in person when you can, and remotely when you cannot. All sessions are live-streamed, recorded, and made available on the workshop portal.

Learning Outcome

You will gain a strong theoretical foundation and practical expertise in Data Science through immersive training, hands-on projects, guided labs, and collaborative learning, with flexible in-person or remote attendance options.

Schedule

| Start Date | Monday, January 13th, 2025 |
|---|---|
| Duration | 3 weeks |
| Session Days | Every Monday, Tuesday, Wednesday, and Thursday |
| Theory Sessions | Monday and Wednesday (3 hours each) |
| Lab Sessions | Tuesday and Thursday (3 hours each) |
| Quiz Release | Every Thursday morning |
| Quiz Review | Every Sunday at 4:30 PM PST |
| Guided Paper Reading | Every Sunday at 12:00 PM (noon) PST |

Call us at 1.855.LEARN.AI for more information.

Skills You Will Learn

Data Science Fundamentals, Data Analysis, Artificial Intelligence & Machine Learning

Syllabus details

Join our Spring Session starting Monday evening, February 3rd, 2025, for a dynamic workshop blending theory and practical application to build a strong foundation in Data Science.

Workshop Highlights

- 50 Hours of Training: 12 evening sessions over 3 weeks.

- In-Depth Lectures: Master the core concepts of Data Science.

- Hands-On Labs: Build AI models and analyze real-world datasets.

- Interactive Quizzes & Projects: Test your knowledge and apply it to real-world scenarios.

- Guided Discussions: Explore key research papers and engage in collaborative learning.

We study the covariance between two variables and its geometric intuition. Next, we learn about feature standardization, Pearson correlation, and its relationship to linear regression with a single predictor. We also study the phenomenon of regression toward the mean. Correlation does not imply causation, though mistaking one for the other is a common fallacy; we will examine this more deeply.
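To make these ideas concrete, here is a minimal plain-Python sketch. It is our own illustration rather than workshop lab material: the helper names are hypothetical, and in the labs you would normally reach for NumPy or Pandas instead. It computes the covariance, standardizes both variables into z-scores, and checks that the least-squares slope on the standardized data equals the Pearson correlation r.

```python
from statistics import mean, pstdev


def covariance(xs, ys):
    """Population covariance: the average product of deviations from the means."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)


def standardize(values):
    """Convert values to z-scores (zero mean, unit population standard deviation)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def pearson_r(xs, ys):
    """Pearson correlation: the covariance of the two standardized variables."""
    return covariance(standardize(xs), standardize(ys))


def ols_slope(xs, ys):
    """Least-squares slope for a single predictor: cov(x, y) / var(x)."""
    return covariance(xs, ys) / covariance(xs, xs)


# Hypothetical toy data with a strong positive linear trend.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

r = pearson_r(x, y)
# Regressing standardized y on standardized x gives a slope equal to r.
slope_std = ols_slope(standardize(x), standardize(y))
print(f"cov = {covariance(x, y):.3f}, r = {r:.4f}, standardized slope = {slope_std:.4f}")
```

Because population statistics are used throughout, r is simply the covariance of the z-scores, which is why the standardized slope and r coincide.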

We study linear regression, the concept of least squares, and gradient descent to minimize the sum of squared errors. Then we study ordinary least squares (OLS) linear regression, polynomial regression and the Runge phenomenon, nonlinear least squares, and Box-Cox transformations. We also learn about residual analysis and other model diagnostic techniques, and are introduced to alternative loss functions.
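As an illustration of least squares, the following sketch fits a line y = w·x + b by batch gradient descent and compares the result with the closed-form OLS solution. The learning rate, number of epochs, toy data, and function names are arbitrary choices for this example, not values taken from the workshop.

```python
def sse(w, b, xs, ys):
    """Sum of squared errors of the line y = w * x + b."""
    return sum((y - (w * x + b)) ** 2 for x, y in zip(xs, ys))


def fit_gradient_descent(xs, ys, lr=0.01, epochs=5000):
    """Fit slope w and intercept b by batch gradient descent on the squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        residuals = [y - (w * x + b) for x, y in zip(xs, ys)]
        # Gradient of the SSE divided by n (i.e. the mean-squared-error gradient),
        # so the step size does not depend on the number of samples.
        grad_w = -2.0 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = -2.0 * sum(residuals) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def fit_closed_form(xs, ys):
    """Ordinary least squares (OLS) solution for one predictor."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    w = sxy / sxx
    return w, my - w * mx


# Hypothetical toy data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

w_gd, b_gd = fit_gradient_descent(x, y)
w_ols, b_ols = fit_closed_form(x, y)
print(f"gradient descent: w={w_gd:.4f}, b={b_gd:.4f}, SSE={sse(w_gd, b_gd, x, y):.4f}")
print(f"closed-form OLS:  w={w_ols:.4f}, b={b_ols:.4f}, SSE={sse(w_ols, b_ols, x, y):.4f}")
```

Both approaches minimize the same loss, so they agree to within the convergence tolerance; gradient descent becomes the practical choice when a model or loss function has no closed-form solution.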

Regularization reduces overfitting and high variance. We add a penalty term to the regression loss function, with its strength controlled by a regularization hyperparameter, and we see a geometric interpretation of this term in terms of the Minkowski distance. Lasso (L1), Ridge (L2), and Elastic-Net regularization are covered in the data-science lab exercises.
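For a single centered predictor, both penalties have simple closed-form solutions, which makes their different behavior easy to see. The sketch below is our own illustration with hypothetical helper names and toy data: the Ridge (L2) slope shrinks smoothly toward zero as the penalty strength `lam` grows, while the Lasso (L1) slope is soft-thresholded and becomes exactly zero once `lam` is large enough.

```python
import math


def centered_sums(xs, ys):
    """Return (Sxy, Sxx) after subtracting the means, so no intercept is needed."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy, sxx


def ridge_slope(xs, ys, lam):
    """Minimize sum((y - w*x)^2) + lam * w^2 (L2 penalty) on centered data."""
    sxy, sxx = centered_sums(xs, ys)
    return sxy / (sxx + lam)


def lasso_slope(xs, ys, lam):
    """Minimize sum((y - w*x)^2) + lam * |w| (L1 penalty) on centered data.

    The solution soft-thresholds Sxy by lam / 2, so it can be exactly zero.
    """
    sxy, sxx = centered_sums(xs, ys)
    return math.copysign(max(abs(sxy) - lam / 2.0, 0.0), sxy) / sxx


# Hypothetical toy data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

for lam in (0.0, 10.0, 20.0, 40.0):
    print(f"lam={lam:5.1f}  ridge w={ridge_slope(x, y, lam):.4f}  lasso w={lasso_slope(x, y, lam):.4f}")
```

The corner of the L1 penalty at zero is what lets Lasso drop a feature entirely, which is why it also serves as a feature-selection method; Elastic-Net combines both penalties.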

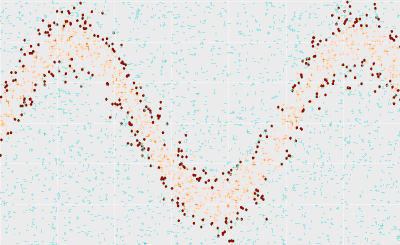

We study three different approaches to clustering data in the feature space:

- K-Means clustering and its close variants, including selecting the optimal number of clusters through scree plots

- Agglomerative (hierarchical) clustering, dendrograms, and various linkage functions

- Density-based clustering techniques such as DBSCAN, OPTICS, and DENCLUE
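A minimal K-Means (Lloyd's algorithm) sketch in plain Python follows. It prints the within-cluster sum of squares (inertia) for several values of k, which is the quantity plotted in a scree or elbow plot. For simplicity it assumes a deterministic farthest-point initialization rather than the randomized k-means++ seeding that scikit-learn's `KMeans` uses by default; the data and function names are hypothetical.

```python
def sq_dist(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def farthest_point_init(points, k):
    """Deterministic seeding: start at the first point, then repeatedly add the
    point whose nearest existing center is farthest away."""
    centers = [tuple(points[0])]
    while len(centers) < k:
        nxt = max(points, key=lambda p: min(sq_dist(p, c) for c in centers))
        centers.append(tuple(nxt))
    return centers


def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm; returns (centers, labels, inertia)."""
    centers = farthest_point_init(points, k)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest center.
        labels = [min(range(k), key=lambda j: sq_dist(p, centers[j])) for p in points]
        # Update step: move each center to the mean of its assigned points.
        new_centers = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centers.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centers.append(centers[j])  # keep an empty cluster's center in place
        if new_centers == centers:
            break
        centers = new_centers
    inertia = sum(sq_dist(p, centers[lab]) for p, lab in zip(points, labels))
    return centers, labels, inertia


# Hypothetical data: three tight groups of four points each.
data = [(0, 0), (0, 1), (1, 0), (1, 1),
        (10, 10), (10, 11), (11, 10), (11, 11),
        (20, 0), (20, 1), (21, 0), (21, 1)]

for k in range(1, 5):
    _, _, inertia = kmeans(data, k)
    print(f"k={k}  inertia={inertia:.2f}")
```

On this toy data the inertia drops sharply up to k = 3 and only slightly afterward; that bend is the elbow that suggests three clusters.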

We study clustering, starting with the concept of grouping data points using distance metrics like Euclidean and Manhattan. We’ll explore K-Means for minimizing within-cluster variance, hierarchical clustering for building cluster hierarchies, and DBSCAN for density-based clustering and outlier detection. We’ll also cover Gaussian Mixture Models for probabilistic clustering.
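DBSCAN can likewise be sketched in a few lines. The version below is an illustrative, unoptimized implementation (its neighbor search is quadratic in the number of points, and all names are hypothetical). It takes the distance function as a parameter so that Euclidean and Manhattan distance can be swapped, and it labels low-density points as noise (-1), which is how DBSCAN doubles as an outlier detector.

```python
import math


def euclidean(p, q):
    return math.dist(p, q)


def manhattan(p, q):
    return sum(abs(a - b) for a, b in zip(p, q))


def dbscan(points, eps, min_pts, dist=euclidean):
    """Return one label per point: cluster ids 0, 1, ... or -1 for noise."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)

    def neighbors(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j in range(len(points)) if dist(points[i], points[j]) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be relabeled as a border point
            continue
        labels[i] = cluster  # i is a core point: start a new cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is also a core point: keep expanding
        cluster += 1
    return labels


# Hypothetical data: two dense groups and one far-away outlier.
points = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10), (11, 11),
          (50, 50)]
print(dbscan(points, eps=1.5, min_pts=3))
print(dbscan(points, eps=1.5, min_pts=3, dist=manhattan))
```

Unlike K-Means, DBSCAN does not need the number of clusters in advance; instead, `eps` and `min_pts` define what counts as a dense region.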

We study several techniques for dimensionality reduction, focusing primarily on Principal Component Analysis (PCA) and its geometric interpretation. We relate PCA to the covariance matrix and discuss the kinds of datasets for which it works best. We also cover simpler approaches such as forward and backward feature selection and Lasso.
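To connect PCA with the covariance matrix, the sketch below handles the two-feature case in plain Python. It builds the sample covariance matrix, computes its eigenvalues in closed form, and takes the leading eigenvector as the first principal axis. Restricting the sketch to two dimensions is a simplification for readability; in practice you would use a library routine such as scikit-learn's `sklearn.decomposition.PCA`. The helper names and data here are hypothetical.

```python
import math


def covariance_matrix_2d(points):
    """Return the mean and the 2x2 sample covariance matrix of 2-D points."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    return (mx, my), [[sxx, sxy], [sxy, syy]]


def pca_2d(points):
    """Return the mean, the eigenvalues (largest first), and the unit principal axes."""
    mean, ((a, b), (_, d)) = covariance_matrix_2d(points)
    # Eigenvalues of the symmetric matrix [[a, b], [b, d]] in closed form.
    half_trace = (a + d) / 2
    gap = math.sqrt(((a - d) / 2) ** 2 + b ** 2)
    lam1, lam2 = half_trace + gap, half_trace - gap
    # (b, lam1 - a) is an eigenvector for lam1; fall back to an axis when b == 0.
    if abs(b) > 1e-12:
        vx, vy = b, lam1 - a
    else:
        vx, vy = (1.0, 0.0) if a >= d else (0.0, 1.0)
    norm = math.hypot(vx, vy)
    pc1 = (vx / norm, vy / norm)
    pc2 = (-pc1[1], pc1[0])  # orthogonal to pc1 in two dimensions
    return mean, (lam1, lam2), (pc1, pc2)


def project(points, mean, axis):
    """Coordinates of the centered points along one principal axis."""
    return [(p[0] - mean[0]) * axis[0] + (p[1] - mean[1]) * axis[1] for p in points]


# Hypothetical, strongly correlated 2-D data.
data = [(2.5, 2.4), (0.5, 0.7), (2.2, 2.9), (1.9, 2.2), (3.1, 3.0),
        (2.3, 2.7), (2.0, 1.6), (1.0, 1.1), (1.5, 1.6), (1.1, 0.9)]
mean, (lam1, lam2), (pc1, pc2) = pca_2d(data)
print("explained variance ratio:", lam1 / (lam1 + lam2))
print("first principal axis:", pc1)
print("1-D projection:", project(data, mean, pc1))
```

The variance of the data projected onto the first axis equals the largest eigenvalue, which is the geometric sense in which PCA finds the direction of maximum variance.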

Guided Labs and Projects

The Basics

- Setting up the AI development environment in Google Cloud (Jupyter notebooks, Colab, Kubernetes)

- Introduction to Pandas and Scikit-learn for data manipulation and model diagnostics, respectively

- Creating interactive data-science applications with Streamlit

- Data visualization techniques

- Kubeflow: Model development life cycle

- Models as a service

Google Cloud AI Platform

- GKE (Google Kubernetes Engine)

- Selecting the right compute instances and containers for deep learning

- Colab and Notebooks in GCP

- Going to production in GCP

- Recommendations AI (if time permits)

Core Topics

- Exploring NumPy and SciPy

- Linear regression with Scikit-Learn

- Model diagnostics with Scikit-Learn and Yellowbrick

- Residual analysis

- Power transforms (Box-Cox, etc.)

- Polynomial regression

- Regularization methods

- Classification with Logistic Regression

- LDA and QDA (linear and quadratic discriminant analysis)

- Dimensionality reduction

- Clustering algorithms (k-Means, EM, hierarchical, and density-based methods)

Explainable AI

- Interpretability of AI models

- LIME (Local Interpretable Model-agnostic Explanations)

- SHAP (Shapley additive explanations)

- Partial dependence plots

Ensemble Methods

- Decision trees and pruning

- Bagging and Boosting

- Random forests and their variants

- Gradient Boosting, XGBoost, CatBoost, etc.

Hyperparameter Optimization

- Grid search

- Randomized search

- Basic introduction to Bayesian optimization

AI Recommendation Systems

(Using Surprise, etc.)

- Memory-based recommenders

- Model-based recommenders

Teaching Faculty

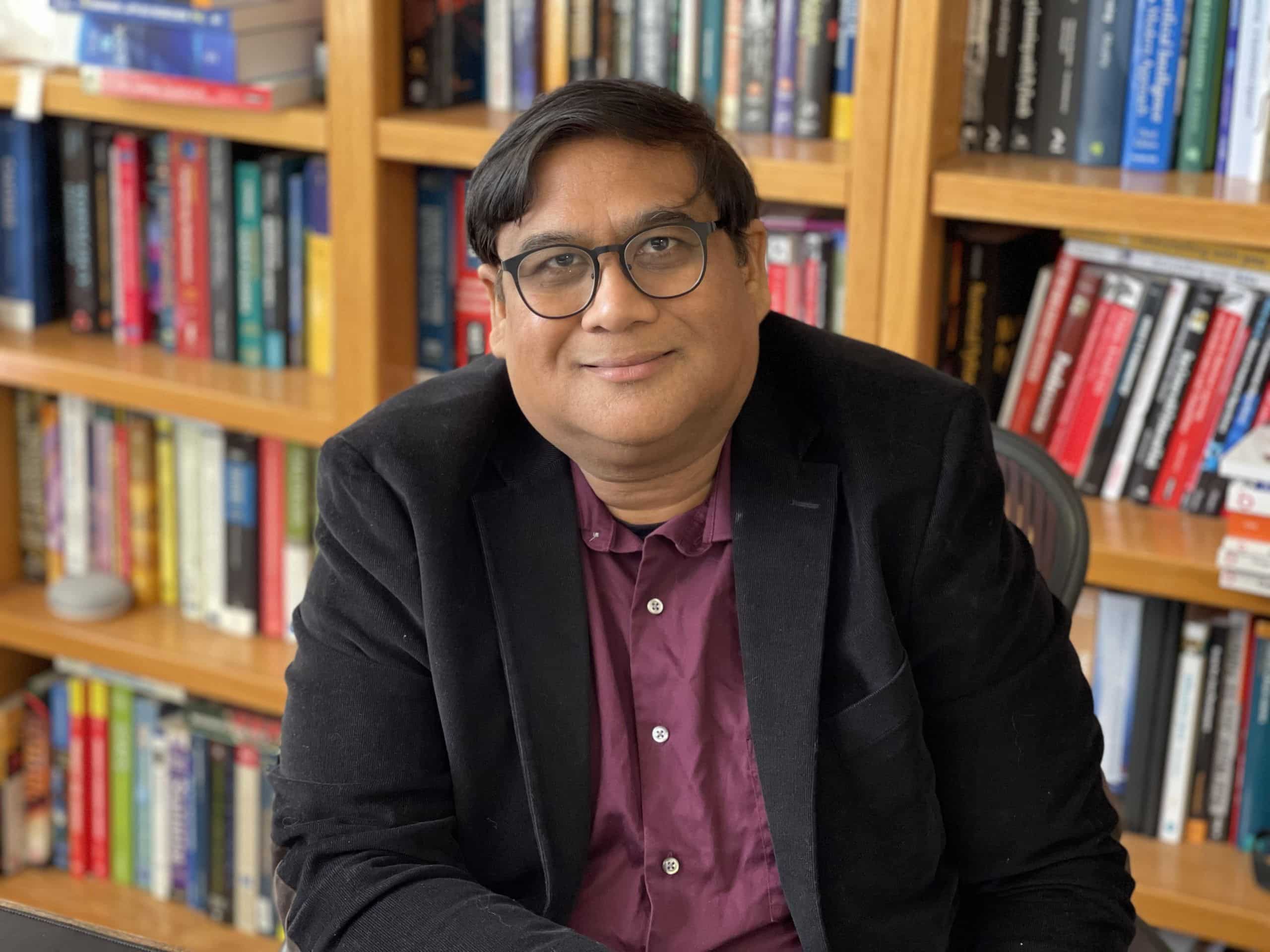

Asif Qamar

Chief Scientist and Educator

Background

For more than three decades, Asif’s career has spanned two parallel tracks: as a deeply technical architect and vice president, and as a passionate educator. While he primarily spends his time technically leading research and development efforts, he expresses his love of teaching through the courses he offers. Through these courses, he aims to mentor and cultivate the next generation of great AI leaders, engineers, data scientists, and technical craftsmen.

Educator

He has been an educator for the last 32 years, teaching various subjects in AI/machine learning, computer science, and physics. He has taught at the University of California, Berkeley Extension, the University of Illinois Urbana-Champaign (UIUC), and Syracuse University. He has also given many courses, seminars, and talks at technical workplaces, and has received various teaching-excellence awards from universities and technical workplaces.

Chandar Lakshminarayan

Head of AI Engineering

Background

His career spans more than 25 years in fundamental and applied research, application development and maintenance, service delivery management, and product development. He is passionate about building products that leverage AI/ML, which has been the focus of his work for the last decade. He also has a background in computer vision for industrial manufacturing, where he developed many novel algorithms for high-precision measurement of engineering components. In addition, he has done innovative algorithmic work in robotics, motion control, and CNC.

Educator

He has also been an educator, teaching various subjects in AI/machine learning, computer science, and physics for the last decade.

Teaching Assistants

Our teaching assistants will guide you through your labs and projects. Whenever you need help or clarification, contact them on the SupportVectors Discord server or set up a Zoom meeting.

Kate Amon

Univ. of California, Berkeley

Shubeeksh K

MS Ramaiah Institute of Technology

Purnendu Prabhat

Kalasalingam Univ.

Harini Datla

Indian Statistical Institute

Kunal Lall

Univ. of Illinois, Chicago

In-Person vs Remote Participation

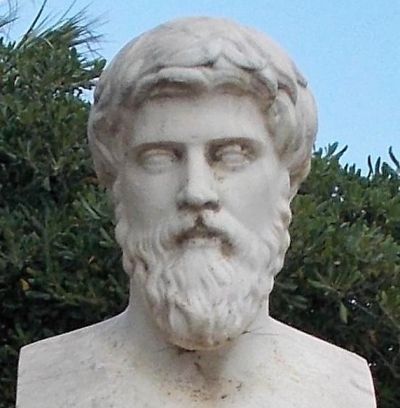

Plutarch

Education is not the filling of a pail, but the lighting of a fire. “For the mind does not require filling like a bottle, but rather, like wood, it only requires kindling to create in it an impulse to think independently and an ardent desire for the truth.”

Our Goal: build the next generation of data scientists and AI engineers

The AI revolution is perhaps the most transformative period in our world. As data science and AI increasingly permeate the fabric of our lives, there arises a need for deeply trained scientists and engineers who can be a part of the revolution.

2,250+ AI engineers and data scientists trained

- Instructors with over three decades of teaching excellence and experience at leading universities.

- Deeply technical architects and AI engineers with a track record of excellence.

- More than 30 workshops and courses offered

- A state-of-the-art facility with over a thousand square feet of whiteboarding space and more than ten student discussion rooms, each equipped with state-of-the-art audio-video systems

- 20+ research internships completed

Where technical excellence meets a passion for teaching

There is no dearth of technical genius in the world; likewise, many people are willing to teach and engaged in teaching. However, it is relatively rare to find someone with years of technical excellence and proven leadership in the field who is also a passionate and well-loved teacher.

SupportVectors is a gathering of such technical minds whose courses are a crucible for in-depth technical mastery in this very exciting field of AI and data science.

A personalized learning experience to motivate and inspire you

Our teaching faculty will work closely with you to help you make progress through the courses. Besides the lecture sessions and lab work, we provide unlimited one-on-one sessions to the course participants, community discussion groups, a social learning environment in our state-of-the-art facility, career guidance, interview preparation, and access to our network of SupportVectors alumni.

Join over 2000 professionals who have developed expertise in AI/ML

Become Part of SupportVectors to Build In-Depth Technical Abilities and Further Your Career.