Course Overview

Starting Sunday morning, Feb 1, 2026

AI Agents Bootcamp is an intensive 12-week program designed to equip AI professionals with cutting-edge skills in building and running multi-agent AI systems. This dual-phase course combines theory with hands-on practice, preparing engineers to build and optimize advanced AI agent applications for real-world challenges. It includes in-depth lecture/theory sessions, guided labs for building AI models, quizzes, projects on real-world datasets, guided readings of influential research papers, and discussion groups.

The teaching faculty for this workshop comprises the instructor, a supportive staff of teaching assistants, and a workshop coordinator. Together they support your learning through close guidance and, when needed, one-on-one sessions.

You can attend the workshop in person or remotely. State-of-the-art facilities and instructional equipment ensure that the learning experience is the same whichever mode you choose. You can also mix the two modes: attend in person when you can, and remotely when you cannot. All sessions are live-streamed, recorded, and made available on the workshop portal.

Learning Outcome

By the end of the AI Agents Bootcamp, you will have mastered the core design patterns for engineering effective, practical, real-world applications driven by AI agents. You will have learned techniques to build and optimize high-performance, robust agentic AI solutions. Through labs and projects driven by real-world use cases, you will gain hands-on experience, along with the skills and confidence to solve complex challenges and build a strong portfolio.

Schedule

| Start date | Sunday, Feb 1, 2026 |
|---|---|
| Duration | 12 weeks |
| Session days | Every Sunday |
| Session timing | 11 AM PT to evening |
| Session type | In-person/Remote |
| Morning sessions | Theory/Paper readings |
| Evening sessions | Lab/Presentation |
| Lab walkthroughs | Wednesday, 7 PM to 10 PM (Summary and Quiz); Thursday, 7 PM to 10 PM (Lab) |

Call us at 1.855.LEARN.AI for more information.

Skills You Will Learn

Advanced NLP and LLM expertise, fine-tuning and optimizing transformer-based models, and specialized prompting methods.

Prerequisites

- Core concepts and foundational definitions of AI Agents

- Why AI Agents matter: Core value proposition and industry significance

- Differences between traditional automation and agentic systems

- Detailed analysis of successful AI agent deployments

- Industry-specific use cases: Logistics, Healthcare, Customer Service, and more

- Common pitfalls and lessons learned

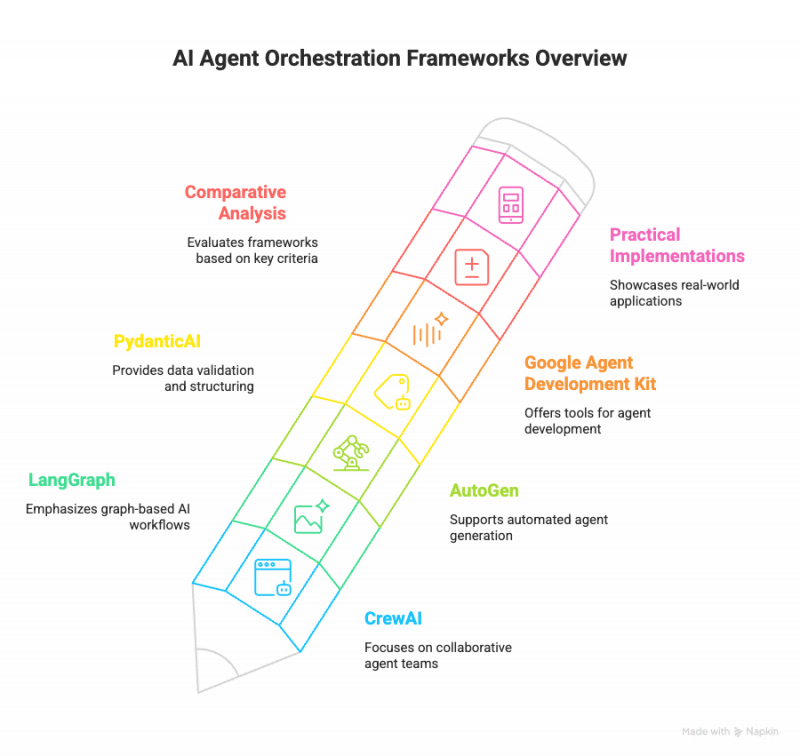

- Deep dive into frameworks: CrewAI, LangGraph, AutoGen, PydanticAI, Google Agent Development Kit (ADK), and more

- Comparative analysis and selection criteria

- Hands-on sessions and practical implementations

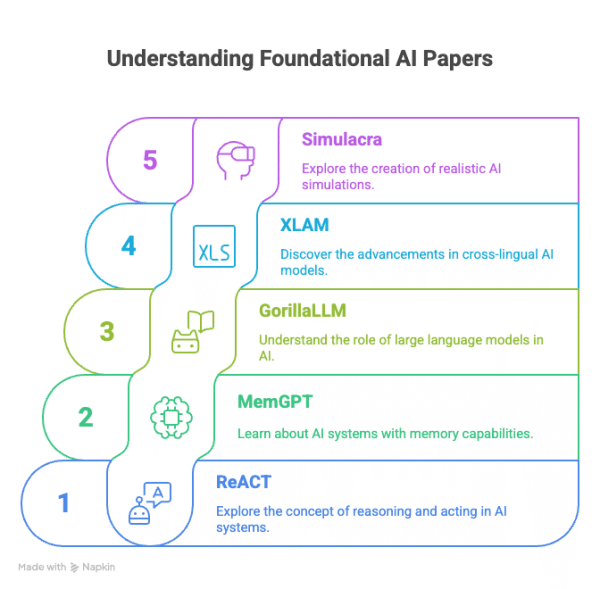

We will do a guided reading of some of the foundational papers in this field.

- Understanding Model Context Protocol (MCP) tools and ecosystem integration

- Designing effective MCP prompts, tools, and resources

- Interactive labs: MCP integration exercises

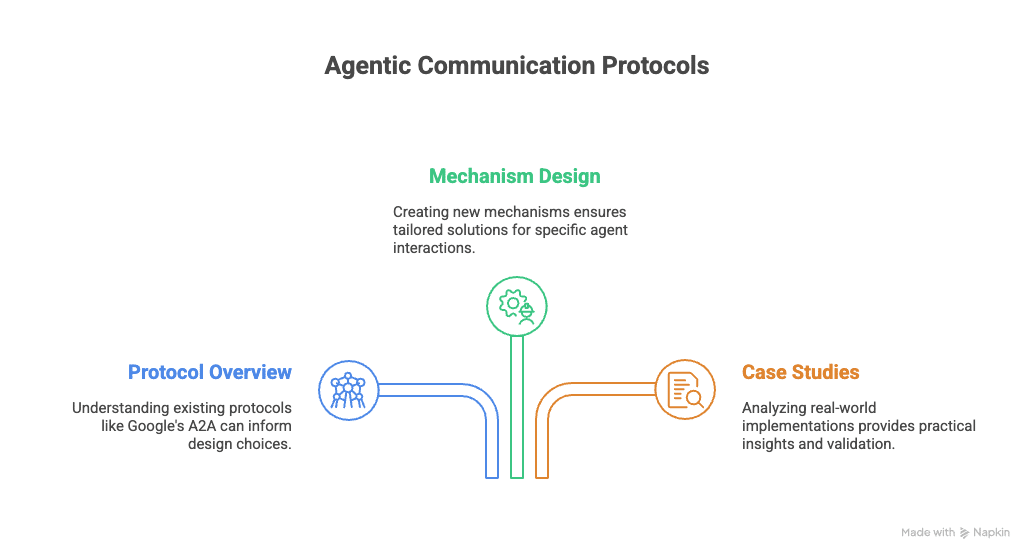

- Overview of agent communication protocols, including Google’s Agent2Agent (A2A) protocol

- Designing robust communication mechanisms for agents

- Real-world implementation case studies

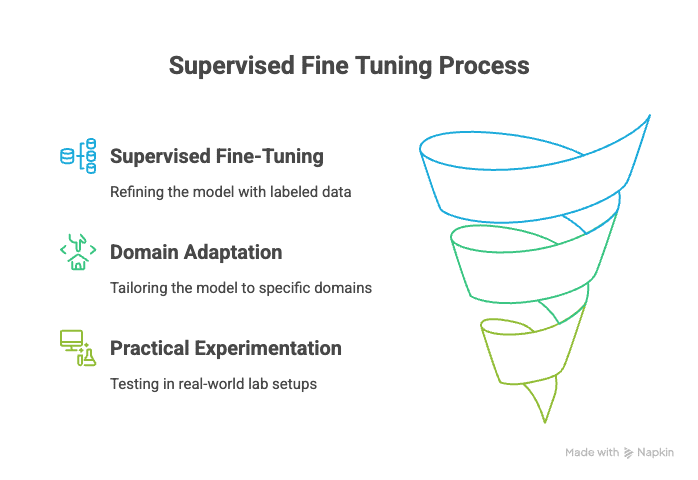

- Techniques in supervised fine-tuning (SFT) of Large Language Models (LLMs)

- Domain-specific adaptation strategies

- Practical fine-tuning labs

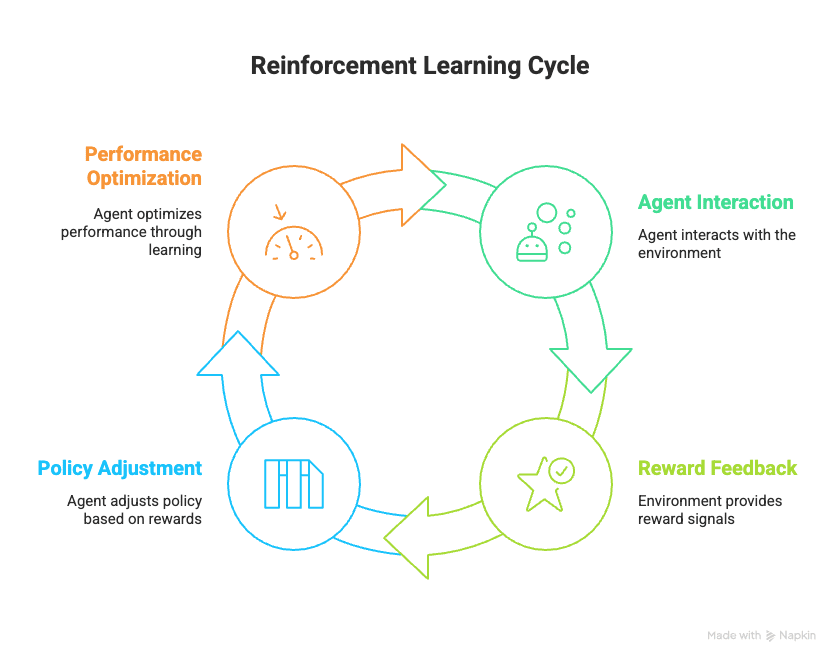

- Core reinforcement learning (RL) concepts: rewards, policy iteration, value iteration

- Exploration vs. exploitation strategies

- Interactive RL exercises

- RL-based fine-tuning methods specific to agent models

- Performance optimization using RL

- Hands-on RL training in simulated environments

- Challenges and strategies in multi-agent reinforcement learning (MARL)

- Agent cooperation and competition dynamics

- Collaborative and competitive multi-agent systems labs

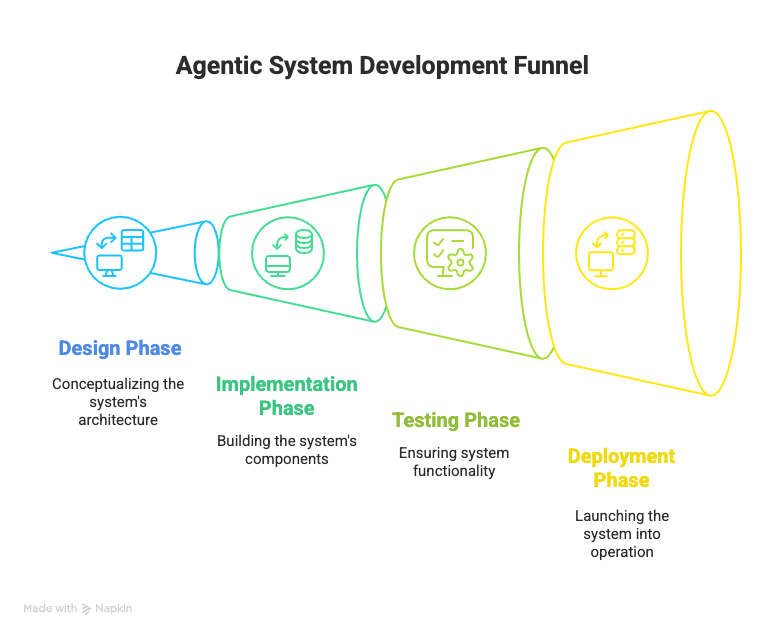

- Comprehensive agentic system development and presentations

- Emerging trends: interpretability, scalability, ethics

- Resources for continued learning and exploration